Introduction

Artificial intelligence is one of the most exciting technological fields of our time, and pioneers like OpenAI keep releasing more powerful systems. With GPT-4 Turbo and an updated GPT-3.5 Turbo, announced at its DevDay conference in November 2023, the company has once again raised the bar for AI-based language processing. The improvements cover core areas such as context size, speed, multimodality, and cost.

Up-to-date Data Foundation for Contemporary Language Understanding

One of the standout features of GPT-4 Turbo is its more recent training data. While the original GPT-4 was trained on data up to September 2021, GPT-4 Turbo's knowledge extends to April 2023. This lets the model handle more recent events, products, and terminology, a clear advantage for use cases that depend on up-to-date context to remain relevant.

Massively expanded context window for more precise results

From a technical perspective, the greatly expanded context window of GPT-4 Turbo is a major step. It can take up to 128,000 tokens per request (prompt plus response), roughly 16 times the 8,192 tokens of the standard GPT-4 model and about four times the 32,768 tokens of GPT-4-32K. Tokens are the units of text the model processes, typically whole words or word fragments; according to OpenAI, 128,000 tokens correspond to more than 300 pages of text. With this larger context, entire documents or long conversation histories fit into a single request, so the model has more information from which to infer relationships and produce relevant answers. The response itself remains limited to 4,096 output tokens.
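To get a feel for these numbers, here is a minimal Python sketch that checks whether a text is likely to fit into the context window. It assumes OpenAI's rule of thumb of roughly four characters per token for common English text and reserves the 4,096-token output limit; the function names are hypothetical, and exact counts require a real tokenizer such as OpenAI's tiktoken library.

```python
# Rough context-budget check for GPT-4 Turbo.
# ASSUMPTION: ~4 characters per token (OpenAI's rule of thumb for English text);
# use a real tokenizer for exact counts.

CONTEXT_WINDOW = 128_000  # total tokens per request (prompt + response)
MAX_OUTPUT = 4_096        # GPT-4 Turbo caps responses at 4,096 tokens
CHARS_PER_TOKEN = 4       # heuristic, not exact


def estimate_tokens(text: str) -> int:
    """Estimate the token count of `text` using the 4-characters-per-token heuristic."""
    # Ceiling division, so a short non-empty text never counts as zero tokens.
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(prompt: str, reserved_output: int = MAX_OUTPUT,
                    window: int = CONTEXT_WINDOW) -> bool:
    """Return True if the estimated prompt size plus the reserved output budget fits the window."""
    return estimate_tokens(prompt) + reserved_output <= window


if __name__ == "__main__":
    report = "lorem ipsum " * 30_000      # 360,000 characters
    print(estimate_tokens(report))        # 90000
    print(fits_in_context(report))        # True
    print(fits_in_context(report * 2))    # False
```

Reserving room for the answer matters because prompt and response share the same window: a prompt that exactly fills 128,000 tokens would leave no space for output.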

Simplified App Development through "Function Calling"

Another major improvement concerns "function calling". Developers describe the functions their application offers, and the model responds with structured requests to call them, including the arguments as JSON. GPT-4 Turbo can now request several function calls in a single response (parallel function calling), for example to look up the weather in three cities at once. The model does not execute the functions itself; the application runs them and sends the results back. In practice, this reduces the number of round trips to the API: instead of one request per action, a single user instruction can often trigger all the required calls.
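The following Python sketch shows how an application might handle a response that requests two function calls at once. The tool definition and message shapes follow the Chat Completions format as we understand it; `get_weather`, `REGISTRY`, and `run_tool_calls` are hypothetical, and the assistant message is written by hand rather than returned by the API, so the sketch runs offline.

```python
import json

# Tool definition in the Chat Completions "tools" format (get_weather is hypothetical).
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
]


def get_weather(city: str) -> dict:
    """Hypothetical local implementation; a real app would query a weather service."""
    return {"city": city, "forecast": "sunny"}


# Maps the tool names the model may request to local Python callables.
REGISTRY = {"get_weather": get_weather}


def run_tool_calls(message: dict) -> list:
    """Execute every tool call in an assistant message and build the
    'tool' messages that are sent back to the model in the next request."""
    results = []
    for call in message.get("tool_calls") or []:
        fn = REGISTRY[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],  # links each result to the call that requested it
            "content": json.dumps(fn(**args)),
        })
    return results


# Hand-written example of an assistant message requesting two calls in parallel.
example_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}},
        {"id": "call_2", "type": "function",
         "function": {"name": "get_weather", "arguments": '{"city": "Tokyo"}'}},
    ],
}

if __name__ == "__main__":
    for msg in run_tool_calls(example_message):
        print(msg["tool_call_id"], msg["content"])
```

Both results go back to the model in one follow-up request, which is where the saving in round trips comes from.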

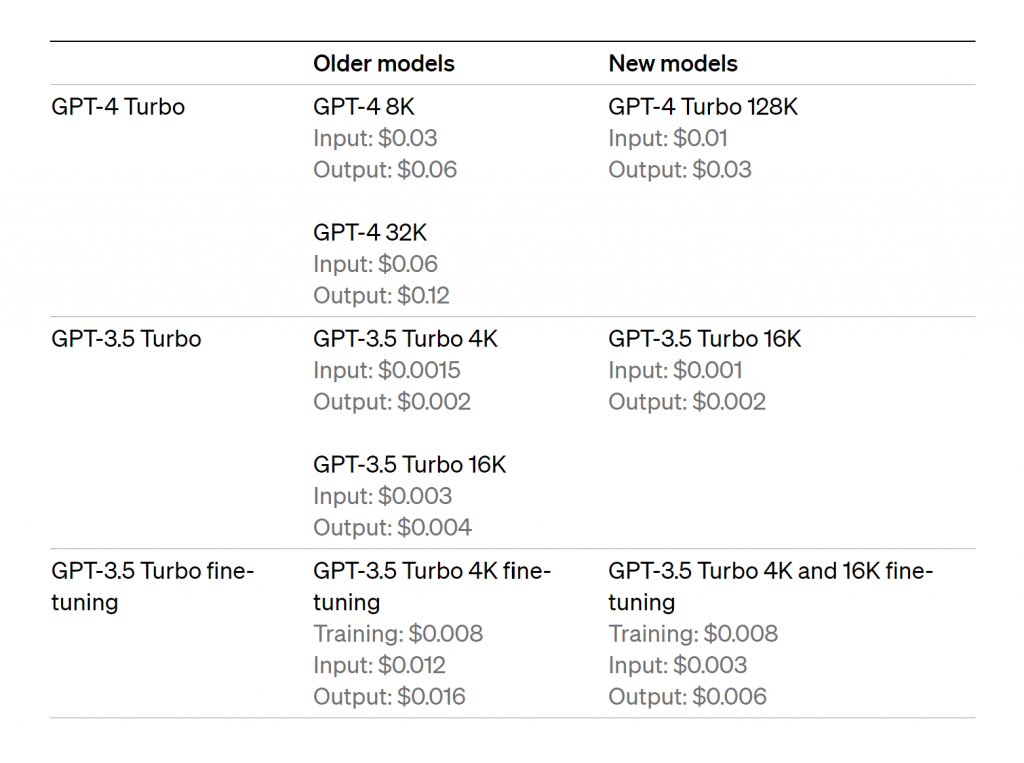

Significantly lower costs through new pricing

In financial terms, the reduced usage fees are likely to attract the most attention. GPT-4 Turbo costs $0.01 per 1,000 input tokens and $0.03 per 1,000 output tokens. For comparison, the previous GPT-4 models charged between $0.03 and $0.12 per 1,000 tokens, depending on the variant (8K or 32K context) and on whether the tokens were input or output. Compared with standard GPT-4, input tokens are three times cheaper and output tokens half the price; compared with GPT-4-32K, input tokens are about 83% cheaper. These savings are particularly noticeable for companies that use AI extensively, and they make these powerful language models even more attractive from a business perspective.
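The actual saving depends on the mix of input and output tokens, since output prices fell less than input prices. This small Python sketch computes per-request costs from the launch list prices (November 2023); the dictionary keys are descriptive labels rather than exact API model names, and current rates should be checked on OpenAI's pricing page.

```python
# Launch list prices (November 2023) in US dollars per 1,000 tokens.
# ASSUMPTION: keys are descriptive labels; prices may have changed since.
PRICES = {
    "gpt-4":       {"input": 0.03, "output": 0.06},
    "gpt-4-32k":   {"input": 0.06, "output": 0.12},
    "gpt-4-turbo": {"input": 0.01, "output": 0.03},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in US dollars of one request with the given token counts."""
    price = PRICES[model]
    return (input_tokens / 1000) * price["input"] + (output_tokens / 1000) * price["output"]


def savings(old: str, new: str, input_tokens: int, output_tokens: int) -> float:
    """Fraction of the cost saved by switching from `old` to `new` for the same workload."""
    return 1 - request_cost(new, input_tokens, output_tokens) / request_cost(old, input_tokens, output_tokens)


if __name__ == "__main__":
    # Example workload: a long prompt with a comparatively short answer.
    for old in ("gpt-4", "gpt-4-32k"):
        print(f"{old}: ${request_cost(old, 10_000, 1_000):.2f}, "
              f"GPT-4 Turbo saves {savings(old, 'gpt-4-turbo', 10_000, 1_000):.0%}")
    # gpt-4: $0.36, GPT-4 Turbo saves 64%
    # gpt-4-32k: $0.72, GPT-4 Turbo saves 82%
```

Output-heavy workloads, such as long generated reports, see smaller relative savings than prompt-heavy ones.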

In the course of these releases, OpenAI has also improved the existing GPT-3.5 Turbo and lowered its price to $0.001 per 1,000 input tokens and $0.002 per 1,000 output tokens. The new version supports a 16K context window by default as well as parallel function calling, which makes this model even more capable.

Multimodal capabilities for cross-media applications

Also noteworthy are the new multimodal capabilities. Previously, GPT-4 could only be used with text input and output via the API. Now GPT-4 Turbo with vision accepts images as input alongside text, for example to describe a photo, read a chart, or analyze a screenshot. Combined with OpenAI's speech and image models, this opens up types of applications that were not achievable with earlier API-based language models.
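Image input is passed as part of the chat message. The Python sketch below builds such a request body with the image embedded as a base64 data URL. The message layout follows the Chat Completions vision format as we understand it, and `gpt-4-vision-preview` was the model name at launch; `image_message` and the placeholder bytes are hypothetical, and actually sending the request (with an HTTP client or the official SDK) is left out.

```python
import base64
import json


def image_message(prompt: str, image_bytes: bytes, mime: str = "image/png") -> dict:
    """Build a user message that combines a text prompt with an inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            # The image travels inside the message as a base64 data URL.
            {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{encoded}"}},
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes; in practice, read a real file, e.g. open("chart.png", "rb").read().
    fake_png = b"\x89PNG placeholder"
    body = {
        "model": "gpt-4-vision-preview",  # vision-capable model name at launch (Nov 2023)
        "messages": [image_message("What does this chart show?", fake_png)],
        "max_tokens": 300,
    }
    payload = json.dumps(body)  # JSON body for the Chat Completions endpoint
    print([part["type"] for part in body["messages"][0]["content"]])  # ['text', 'image_url']
```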

In applications, multimodality comes from combining GPT-4 Turbo with OpenAI's specialized models, which are offered as separate APIs: DALL-E 3 generates images, Whisper converts speech to text, and a new text-to-speech API produces spoken output. Combining the analysis and production of different media enables new use cases, such as realistic dialogue systems with voice output and matching visualizations, or automated multimedia storytelling.
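Because these capabilities live in separate models, an application has to chain them. The Python sketch below models one turn of a voice dialogue as a pipeline of injected functions; `multimodal_turn` and the stand-in lambdas are hypothetical, and in a real system each callable would wrap the corresponding OpenAI API call.

```python
from typing import Callable, Optional


def multimodal_turn(
    audio: bytes,
    transcribe: Callable[[bytes], str],                   # stand-in for speech-to-text (Whisper)
    chat: Callable[[str], str],                           # stand-in for the language model (GPT-4 Turbo)
    synthesize: Callable[[str], bytes],                   # stand-in for text-to-speech
    illustrate: Optional[Callable[[str], bytes]] = None,  # stand-in for image generation (DALL-E 3)
) -> dict:
    """Run one dialogue turn: speech in, text reply, speech (and optionally an image) out."""
    transcript = transcribe(audio)
    reply = chat(transcript)
    return {
        "transcript": transcript,
        "reply": reply,
        "speech": synthesize(reply),
        # Only generate a picture when an image model has been supplied.
        "image": illustrate(reply) if illustrate is not None else None,
    }


if __name__ == "__main__":
    # Offline stubs so the pipeline can be exercised without any API calls.
    result = multimodal_turn(
        b"<recorded audio>",
        transcribe=lambda audio: "Tell me a story about a lighthouse.",
        chat=lambda text: f"Here is a story inspired by: {text}",
        synthesize=lambda text: text.encode("utf-8"),
    )
    print(result["reply"])
    print(result["image"])  # None, because no image model was supplied
```

Injecting the model calls as functions keeps the orchestration logic testable and makes it easy to swap one model for another.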

Conclusion

With GPT-4 Turbo and the updated GPT-3.5 Turbo, OpenAI has once again raised the bar in AI development. The improvements in context size, speed, multimodality, and cost-efficiency are likely to enable a wide range of innovative applications and boost productivity in businesses. It remains to be seen what further potential will be unlocked, but AI pioneers like OpenAI are clearly driving development at a rapid pace.

FAQ

How do GPT-4 and GPT-4 Turbo differ?

GPT-4 Turbo has been trained on more recent data (up to April 2023 instead of September 2021), so it handles newer topics better. In addition, its context window has grown to 128,000 tokens, compared with 8,192 or 32,768 tokens for the previous GPT-4 models.

How much can GPT-4 Turbo save?

GPT-4 Turbo costs $0.01 per 1,000 input tokens and $0.03 per 1,000 output tokens. That is a third of GPT-4's input price and half of its output price; compared with GPT-4-32K, input tokens are about 83% cheaper. This makes the powerful model far more cost-effective.

What new use cases does multimodality enable?

Combining text, images, and speech in one application allows, for example, more realistic dialogue systems, automated multimedia storytelling, or new possibilities for VR.

How can developers customize the models?

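Fine-tuning can be used to adapt the models to specific use cases. It is generally available for GPT-3.5 Turbo, while GPT-4 fine-tuning was initially offered only through an experimental access program; organizations that need deeper customization can apply to OpenAI's Custom Models program.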
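Fine-tuning data for OpenAI's chat models is uploaded as a JSONL file in which each line holds one example conversation under a `messages` key. The Python sketch below serializes hypothetical support-desk examples in that shape and applies a minimal sanity check of our own (known roles, at least one assistant reply); that check is an assumption, not OpenAI's full validation.

```python
import json

ALLOWED_ROLES = {"system", "user", "assistant"}

# Hypothetical training examples for a customer-support assistant.
EXAMPLES = [
    {"messages": [
        {"role": "system", "content": "You are a concise support assistant."},
        {"role": "user", "content": "How do I reset my password?"},
        {"role": "assistant", "content": "Open Settings > Account and choose 'Reset password'."},
    ]},
    {"messages": [
        {"role": "system", "content": "You are a concise support assistant."},
        {"role": "user", "content": "Can I change my username?"},
        {"role": "assistant", "content": "Yes, under Settings > Profile."},
    ]},
]


def is_valid_example(example: dict) -> bool:
    """Minimal sanity check (our assumption): only known roles and at least one assistant reply."""
    roles = [message["role"] for message in example.get("messages", [])]
    return set(roles) <= ALLOWED_ROLES and "assistant" in roles


def to_jsonl(examples: list) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(example, ensure_ascii=False) + "\n" for example in examples)


if __name__ == "__main__":
    if all(is_valid_example(e) for e in EXAMPLES):
        # Write this string to a .jsonl file before uploading it for fine-tuning.
        print(to_jsonl(EXAMPLES), end="")
```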

Are there ethical risks in the use of AI?

Possible issues include biases in training data or misuse for disinformation. Developers should consider such aspects from the beginning.
